AI & Beauty

Beauty is in the eye of the *human* beholder.

Below is a piece from Nathan Labenz about attempting to quantify beauty with AI, and what he’s learned watching the filmmakers at Waymark wrestle with generative AI while creating The Frost. For context, aside from hosting The Cognitive Revolution, Nathan is a co-founder of Waymark, which makes advertising and marketing videos for small businesses.

A quick note: the Turpentine podcast network officially launched this past week, and The Cognitive Revolution podcast is proudly part of it. To learn more, subscribe to the Turpentine Substack.

The Cognitive Revolution podcast launched in February. We've released 34 episodes with outstanding guests, and just hit a new high as the #15 Technology Podcast in America. Quick link to subscribe here.

Be sure to check out the rest of Turpentine’s podcasts — where experts talk to experts — at turpentine.co, including a newly launched show about the business of media called Media Empires.

Now back to Nathan’s essay on AI and beauty.

Some background on Waymark:

Business-wise, Waymark partners with leading TV companies and other ad platforms. Spectrum Reach was an early, visionary customer. Since launching our generative AI product experience in January, we've been going viral among small business owners, gradually but globally, with a couple of multi-million-view TikToks bringing in upwards of 1,000 new free-trial users per day.

One secret to our success, even pre-AI, has been our "business profile builder", which auto-imports a small business's existing content from, e.g., Facebook, Google, and its business website in about 15 seconds. It's super convenient, but it poses a number of practical challenges.

Benchmarking Beauty

Many imported images are simply not good enough for marketing. Here are a few, selected randomly from our database. You can see the challenge of such variable quality, and understand how it drove the creators at Waymark crazy.

For years we dreamed of AI that could understand images well enough to allow us to automatically complement a video script, but the best AI could do was image tagging, which would get you lists like ["man", "woman", "chair", "table"]: accurate, but not very helpful.

For lack of viable AI, we created a number of filters to eliminate the worst images:

- minimum file size
- aspect ratio requirements
- OCR
- home-grown "complexity" scores

This took out the garbage, but as of 2020, we had no way to determine scene content or assess beauty.
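A filter stage like this mostly comes down to a handful of threshold checks. Here's a minimal Python sketch. The `ImageInfo` fields, every threshold, and the assumption that OCR output and the "complexity" score have already been reduced to single numbers are all hypothetical stand-ins, not Waymark's actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class ImageInfo:
    # Hypothetical per-image metadata; a real pipeline would compute these.
    file_size_bytes: int
    width: int
    height: int
    text_fraction: float  # assumed: share of the image covered by OCR-detected text, 0..1
    complexity: float     # assumed stand-in for a home-grown "complexity" score, 0..1


# Illustrative thresholds only (assumptions, not Waymark's settings).
MIN_FILE_SIZE = 50_000        # bytes
ASPECT_RANGE = (0.5, 2.0)     # allowed width / height ratios
MAX_TEXT_FRACTION = 0.2
MIN_COMPLEXITY = 0.3


def passes_filters(img: ImageInfo) -> bool:
    """Return True only if the image survives every rule-based filter."""
    if img.file_size_bytes < MIN_FILE_SIZE:
        return False  # tiny files are usually low resolution
    aspect = img.width / img.height
    if not ASPECT_RANGE[0] <= aspect <= ASPECT_RANGE[1]:
        return False  # extreme banners or strips
    if img.text_fraction > MAX_TEXT_FRACTION:
        return False  # e.g. flyers or screenshots dominated by text
    if img.complexity < MIN_COMPLEXITY:
        return False  # hypothetical rule: reject near-uniform images
    return True


photo = ImageInfo(400_000, 1600, 1067, 0.02, 0.7)
banner = ImageInfo(120_000, 1500, 300, 0.05, 0.6)  # 5:1 aspect ratio
print(passes_filters(photo), passes_filters(banner))  # True False
```

Note that nothing here says anything about what the image shows or whether it's attractive, which is exactly the gap described above.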

OpenAI's CLIP, published Jan 2021, changed what was possible in image understanding. It used a web-scale dataset to connect high-dimensional text and image latent spaces for the first time.

Now, we could reliably determine whether an image showed "a TV studio" or "a conference room" instead of getting the old ["man", "woman", "chair", "table"]. By open-sourcing CLIP, OpenAI seeded the entire text-to-image and text-to-everything revolution we've seen over the last two years.
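The jump from tags to scene descriptions comes from how CLIP is used for zero-shot classification: embed the image and a few candidate captions, then pick the caption whose embedding is most similar to the image's. Below is a minimal pure-Python sketch of that final comparison step only. The 3-dimensional vectors are made-up stand-ins for real CLIP embeddings (which come from CLIP's image and text encoders and have hundreds of dimensions), and `scale=100.0` is an assumed approximation of CLIP's learned temperature.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_probs(image_emb, label_embs, scale=100.0):
    """Softmax over scaled cosine similarities between one image and each caption."""
    img = normalize(image_emb)
    logits = {}
    for label, emb in label_embs.items():
        txt = normalize(emb)
        logits[label] = scale * sum(a * b for a, b in zip(img, txt))
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - m) for k, v in logits.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}


# Toy vectors standing in for real CLIP embeddings (hypothetical values).
labels = {
    "a photo of a tv studio": [0.9, 0.1, 0.2],
    "a photo of a conference room": [0.2, 0.9, 0.1],
}
image = [0.8, 0.2, 0.3]
probs = zero_shot_probs(image, labels)
print(max(probs, key=probs.get))  # a photo of a tv studio
```

The candidate captions can be any free text, which is what makes this so much more useful than a fixed tag vocabulary.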

But CLIP, as it turns out, has a very weak conception of beauty. Because the captions it was trained on describe, but only rarely evaluate, the photos, it can't reliably tell what's appealing and what's not. This remains true of most image understanding datasets and models today.

Thank you Omneky for sponsoring The Cognitive Revolution. Omneky is an omnichannel creative generation platform that lets you launch hundreds of thousands of ad iterations (that actually work) customized across all platforms. Show the perfect ad tailored for each customer. Omneky combines generative AI and real-time advertising data. Mention "Cog Rev" for 10% off.

The core challenge, I learned, is not just about the nature of the dataset, but the nature of beauty itself.

It turns out that beauty is truly – quantifiably – subjective. Give people a set of images and ask them to evaluate them for beauty, and you will get a LOT of disagreement.

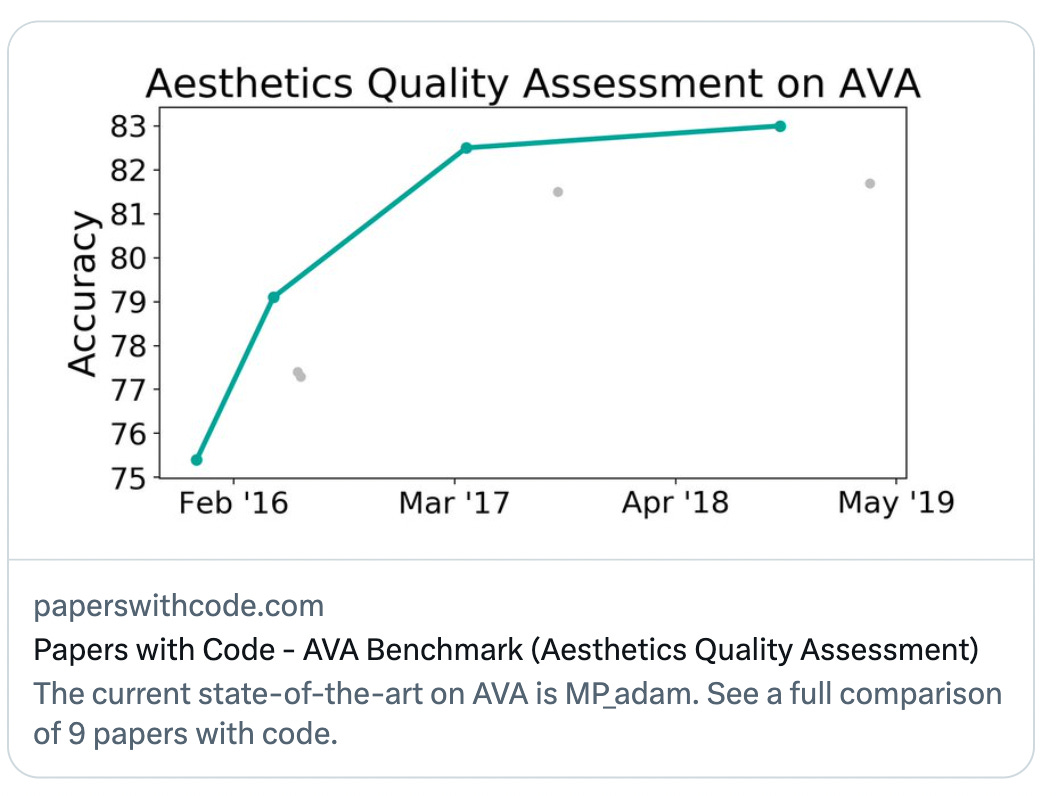

The canonical paper and dataset on this problem is AVA. It contains about 250K images, with an average of roughly 210 aesthetic ratings each. Why so many? Look at how variable the scores are: the most popular score for an image gets under 40% of the votes, and five different scores each get 10% or more.
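To make that spread concrete, here's a small Python sketch that summarizes one image's vote histogram on AVA's 1-to-10 scale. The vote counts are invented for illustration (they are not from a real AVA image), chosen to match the pattern just described.

```python
def vote_stats(counts):
    """Summarize a vote histogram where counts[i] is the number of votes for score i + 1."""
    total = sum(counts)
    shares = [c / total for c in counts]
    mean = sum((i + 1) * c for i, c in enumerate(counts)) / total
    var = sum(c * ((i + 1) - mean) ** 2 for i, c in enumerate(counts)) / total
    return {
        "votes": total,
        "mean": mean,
        "std": var ** 0.5,
        "mode_share": max(shares),  # fraction of votes for the most popular score
        "scores_at_10pct_or_more": sum(s >= 0.10 for s in shares),
    }


# Hypothetical votes for scores 1..10 (illustrative, not a real AVA image).
example = [2, 5, 22, 30, 58, 45, 27, 13, 5, 3]
stats = vote_stats(example)
print(stats["votes"], round(stats["mean"], 2), round(stats["mode_share"], 3),
      stats["scores_at_10pct_or_more"])  # 210 5.36 0.276 5
```

A mean near 5.4 hides the fact that barely a quarter of raters picked the single most common score.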

A number of people did train models on this dataset, but the trail goes a bit cold as of 2019. My theory is that obvious noise in the dataset, while an honest look at reality, made this an unappealing challenge, and people focused on other things.

We tried several of these models on our own small business dataset in early 2022, but the results were not encouraging. They correlated well with one another, but not with our human evaluations: R² values were all below 0.15.

We also tried the LAION Aesthetics model (@laion_ai), a project that Hugging Face and Stability AI funded to extend the AVA dataset and better support image generation projects, with the same result.

Finally, we tried Everypixel. They collected 347K Instagram photos and hired professional photographers to rate them on aesthetic and technical merit, asking evaluators NOT to consider the attractiveness of any people or products in the photo. Still, the R² values were all super low.
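R² is the share of variance in one set of scores explained by a linear fit on another, so a value under 0.15 means a model's score accounts for less than 15% of the variation in human ratings. Here's a minimal Python sketch of one common way to compute it, as the squared Pearson correlation between model scores and human ratings. The exact method behind the numbers above isn't specified, and the sample scores are made up.

```python
def r_squared(xs, ys):
    """Squared Pearson correlation; equals R² of a simple linear fit of ys on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov * cov / (vx * vy)


# Hypothetical scores for six images (made up, not Waymark's data).
model_scores = [4.1, 5.6, 3.9, 6.2, 5.0, 4.4]  # an aesthetic model, 0..10
human_ratings = [2, 3, 4, 2, 5, 3]             # a human rater, 1..5
print(round(r_squared(model_scores, human_ratings), 3))
```

Because R² only measures linear agreement, it doesn't matter that the two scores live on different scales.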

To give you a super concrete sense, this image got a 1.5/10 from Everypixel, a 7.5/10 from LAION, a 4.9/10 from one of the original AVA models, and a 2/5 from our human rater.

How would you score this image for beauty?
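To see how far apart those four judgments are, it helps to put them on one scale. A quick Python sketch, assuming each scale runs from 0 to its maximum (if a system actually scores from 1 to 10, setting `lo=1` shifts the numbers somewhat):

```python
def to_unit_scale(score, lo, hi):
    """Map a score on the range [lo, hi] onto [0, 1]."""
    return (score - lo) / (hi - lo)


# The four scores quoted above, each with an assumed (lo, hi) scale.
ratings = {
    "Everypixel": (1.5, 0, 10),
    "LAION": (7.5, 0, 10),
    "AVA model": (4.9, 0, 10),
    "Human rater": (2, 0, 5),
}
unit = {name: to_unit_scale(s, lo, hi) for name, (s, lo, hi) in ratings.items()}
for name, value in unit.items():
    print(f"{name}: {value:.2f}")
print(f"spread: {max(unit.values()) - min(unit.values()):.2f}")  # spread: 0.60
```

Under these assumptions the models span most of the scale for a single image.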

All that said, there is some useful signal. If you sort the images by aesthetic score, the highest-scoring images will clearly look better than the lowest-scoring ones. But in the middle, and really for any images with similar scores, there's no rhyme or reason to the ranking.

This, then, is the perhaps surprisingly sad state of the art in AI for visual aesthetic judgments: we can improve the overall quality of a sample by eliminating the bottom X% from consideration. If anyone can do much better on a super-diverse user-generated content (UGC) dataset, I would love to hear about it!
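In practice, "eliminate the bottom X%" can be as simple as the sketch below: use the aesthetic score only to make a cut, and don't trust it to rank what survives. The function name, file names, and scores are hypothetical; any aesthetic model's scores could be plugged in.

```python
import math


def drop_bottom_fraction(scored, fraction):
    """Drop the lowest-scoring `fraction` of (item, score) pairs, keeping input order.

    Only the cut uses the score; survivors are not re-ranked, since scores say
    little about ordering among mid-range images.
    """
    n_drop = math.floor(len(scored) * fraction)
    # Indices of the n_drop lowest scores (ties broken by original position).
    lowest = sorted(range(len(scored)), key=lambda i: scored[i][1])[:n_drop]
    dropped = set(lowest)
    return [pair for i, pair in enumerate(scored) if i not in dropped]


# Hypothetical aesthetic scores for five imported images.
images = [("logo.png", 2.1), ("storefront.jpg", 6.8), ("menu.jpg", 3.0),
          ("team.jpg", 5.5), ("dish.jpg", 7.2)]
kept = drop_bottom_fraction(images, 0.4)
print([name for name, _ in kept])  # ['storefront.jpg', 'team.jpg', 'dish.jpg']
```

Keeping the survivors in their original order is a deliberate choice: the cut is the only part of the score we can lean on.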

So, what does all this mean for art and filmmaking in the generative AI era? First, there are a huge number of AI generations, and many hours, behind the AI artwork you see. Waymark's Creative Director has been hammering away with DALL-E 2 for a year.

This isn't unique to Waymark. Suhail Doshi (@Suhail) told me in the first episode of The Cognitive Revolution (@cogrev_podcast) that he set the Playground AI free-tier limit at 1,000 images per day because a full 10% of users were already generating more than that at the time.

Second, with current tools, conceptual mastery and a sophisticated vocabulary are critical. Many are eager to dismiss "prompt engineering," but I think the reality is that to direct creators, whether human or AI, you need a high-resolution vocabulary.

Cultural literacy is also super important. "Everything's a remix," Stephen Parker and I have said to one another many times. But at the same time, culture moves fast, and foundation models, as they exist today, are inherently behind.

Third, you need a vision to get anywhere exciting. Today's visual asset generation AIs can show flashes of artistic brilliance, just as the latest large language models show sparks of artificial intelligence.

But just as GPT-4 still can't do science, even the best creative AIs can't sustain a creative vision to develop something like The Frost on their own. Check out this behind-the-scenes video describing how The Frost was made, with a ton of creative intention, strategy, and experimentation.

The Waymark team talks about keeping "Humans at the Helm" of creative projects. With current technology, it's the only way. But in keeping with our core values, I would emphasize that the current arrangement should not be taken for granted.

If there's one thing I've learned over the last two years of intensive study of AI, it's that just because AI can't do something today doesn't mean that it won't soon.

It wouldn't shock me if Yann LeCun and Meta AI released a model with unprecedented aesthetic sophistication any day.

This, in combination with AI's near-mastery of language, which Yuval Harari calls the "master key" to "all of our institutions," does raise the prospect that non-human systems could soon be shaping human culture more than we humans do ourselves.

Hollywood writers are already organizing around this. The more I study the situation, the more sympathetic I am to cultural protection for humans. Not by restricting use of AI tools, but by reserving some roles for humans. It's about more than money.

Which brings me to one final fun fact about The Frost. While the imagery was created with DALL-E 2, the script itself was written entirely by @bigkickcreative and team. Why? Mainly because he loves writing scripts, but also to serve as an example of keeping humans at the helm.

Catch up on recent episodes of The Cognitive Revolution:

Where are the Moats in AI (Spotify | Apple | YouTube), where Erik Torenberg and Nathan Labenz analyze the moats of the most powerful companies in AI (building on this Substack post and Twitter megathread from Nathan Labenz)

The Tiny Model Revolution with Ronen Eldan and Yuanzhi Li of Microsoft Research (Spotify | Apple | YouTube). Ronen and Yuanzhi discuss the small natural language dataset they created, called TinyStories. TinyStories is designed to reflect the full richness of natural language while still being small enough to support research with modest compute budgets. Using this dataset, they explored aspects of language model performance, behavior, and mechanism by training a series of models ranging in size from just 1 million to a maximum of 33 million parameters, which is still just 2% of the scale of GPT-2.

The Consumer Rights Revolution with Joshua Browder, CEO of DoNotPay (Spotify | Apple | YouTube). This is a wide-ranging, fascinating discussion about the current state of AI use in law, what policymakers should consider in regulating AI, and the ethics of robo-lawyers for consumer use.

Until next time,