Can GPT-4 Do Science?

After experimenting as a Red Teamer and contemplating a new research paper, I believe the answer is still, for now,

"No."

I’ve shared a lot about my Red Team testing of GPT-4 (ICYMI: a good place to start is Episode 11 and Episode 13 of The Cognitive Revolution Podcast). I spent months working with the model, using it for real-life scenarios, and at the end of that period I found it to be closer to human-level intelligence than to human-like intelligence.

I also identified some fundamental weaknesses and limitations. One capability I tried and failed to get GPT-4 to demonstrate was meaningful scientific ability. My takeaway was that GPT-4 can’t do science.

But a new paper says it can.

Emergent Autonomous Scientific Research Capabilities of LLMs was authored by Daniil A. Boiko, Robert MacKnight, and Gabe Gomes and released on April 11th.

See the abstract below, bolded emphasis mine.

> Transformer-based large language models are rapidly advancing in the field of machine learning research, with applications spanning natural language, biology, chemistry, and computer programming. Extreme scaling and reinforcement learning from human feedback have significantly improved the quality of generated text, enabling these models to perform various tasks and reason about their choices. In this paper, we present an Intelligent Agent system that combines multiple large language models for autonomous design, planning, and execution of scientific experiments. We showcase the Agent’s scientific research capabilities with three distinct examples, with the most complex being the successful performance of catalyzed cross-coupling reactions. Finally, we discuss the safety implications of such systems and propose measures to prevent their misuse.

To be fair, my lede overstates the authors' claim in a subtle but important way. The authors of this paper show how GPT-4’s “scientific research capabilities” can accelerate scientific discovery by acting as a super-capable research assistant, but they stop short of claiming that GPT-4 can run independent science experiments.

The experimental setup combines recent conceptual advances in LLM usage: multi-agent system design, retrieval, and advanced tool use. When the system is given prompts like "synthesize aspirin", it can actually … synthesize aspirin. For real! You should be blown away by this!

Quick overview:

The system is built around a central "planner" role, supported by several other roles: a web researcher, a technical documentation reader, a Python code executor, and an interface to the wet lab automation platform Emerald Cloud Lab.
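To make that division of labor concrete, here is a minimal Python sketch of a planner routing steps to supporting roles. The action names (`SEARCH`, `DOCS`, `PYTHON`, `EXPERIMENT`) and the handler functions are hypothetical stand-ins, not the paper's prompts or code (which weren't released), and the handlers return placeholder strings instead of calling real services.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical stand-ins for the supporting roles. Real versions would call a
# search engine, a documentation index, a sandboxed interpreter, and the lab
# automation platform; these just return placeholder strings.
def web_search(query: str) -> str:
    return f"[search results for: {query}]"


def read_docs(query: str) -> str:
    return f"[documentation excerpts for: {query}]"


def run_python(code: str) -> str:
    return f"[output of: {code}]"


def submit_to_lab(protocol: str) -> str:
    return f"[lab job submitted: {protocol}]"


# Hypothetical action names the planner might emit, mapped to each role.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "SEARCH": web_search,
    "DOCS": read_docs,
    "PYTHON": run_python,
    "EXPERIMENT": submit_to_lab,
}


def route(action: str, arg: str) -> str:
    """Send one planner step to the matching supporting role."""
    handler = HANDLERS.get(action)
    if handler is None:
        return f"[error: unknown action {action!r}]"
    return handler(arg)


def run_plan(steps: List[Tuple[str, str]]) -> List[str]:
    """Run a fixed list of (action, argument) steps and collect observations.

    In a real agent, each observation would be fed back to the planner model
    to choose the next step; here the plan is hard-coded.
    """
    return [route(action, arg) for action, arg in steps]


if __name__ == "__main__":
    plan = [
        ("SEARCH", "aspirin synthesis from salicylic acid"),
        ("DOCS", "how to heat a reaction mixture"),
        ("EXPERIMENT", "draft aspirin protocol"),
    ]
    for observation in run_plan(plan):
        print(observation)
```

The interesting part of the real system is the loop this sketch leaves out: the planner model reading each observation and deciding what to do next.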

The "technical documentation reader" taps into documentation that was curated and indexed specifically for this system – so this is not something that runs immediately off the shelf. But that doesn’t mean it’s hard to do either. It’s quite easy actually; they simply use OpenAI's embeddings to embed Emerald Cloud Lab's documentation.
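Here is roughly what embedding-based documentation search looks like, as a sketch under stated assumptions. The `toy_embed` function is a hypothetical word-count stand-in for a real embedding model such as OpenAI's, so it only captures word overlap, not meaning; the indexing step (embed every chunk once) and the cosine-similarity ranking have the same shape either way. The documentation chunks are made up.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

Index = List[Tuple[str, Counter]]


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for a real embedding model.

    Counts lowercase word tokens, so similarity reflects word overlap only.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(chunks: List[str]) -> Index:
    """Embed each documentation chunk once, ahead of time."""
    return [(chunk, toy_embed(chunk)) for chunk in chunks]


def search(index: Index, query: str, k: int = 2) -> List[str]:
    """Return the k chunks whose embeddings are most similar to the query's."""
    q = toy_embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Hypothetical documentation chunks standing in for real platform docs.
DOCS = [
    "How to heat a sample: specify temperature and duration.",
    "How to transfer liquid between containers using a pipette.",
    "How to measure the melting point of a solid sample.",
]

if __name__ == "__main__":
    index = build_index(DOCS)
    print(search(index, "transfer liquid with a pipette", k=1))
```

The retrieved chunks would then be pasted into the model's context so it can write code against the platform's actual syntax rather than guessing.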

(Fun fact: a smart friend suggested that I experiment with Emerald Cloud Lab in my Red Team testing. I couldn't find any ECL documentation, but I did find that while GPT-4's built-in chemistry knowledge is quite limited, it can deliver reasonable-looking code (with hallucinated syntax) for basic reaction protocols.)

Prediction:

Here’s my prediction. AI agents will gradually have an easier time using external tools and platforms as developers collectively recognize the value of making documentation searchable in an LLM-friendly way.

And going a step further, might something like OpenAI's Ada embeddings emerge as the foundation for a sort of privacy-preserving communication standard? For example, imagine you want to search a medical database for information relevant to a patient’s history. You embed your query and send only the embedded query to the service (which already has everything embedded). The service performs the comparisons and sends you back the results. It never sees your raw data!
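A minimal sketch of that flow, assuming both sides share the same embedding model: the client embeds the query locally and sends only the vector, and the service ranks records it embedded ahead of time. The hashed bag-of-words `embed` function, the `SearchService` class, and the sample records are all hypothetical, and this sketch does nothing about the reversibility concern below.

```python
import math
import re
import zlib
from typing import Dict, List

DIM = 256  # hypothetical embedding size; real models use far more dimensions


def embed(text: str) -> List[float]:
    """Hypothetical embedding model shared by client and server.

    Hashes each word into one of DIM buckets, then L2-normalizes, so the dot
    product of two outputs is their cosine similarity.
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class SearchService:
    """Server side: stores pre-computed record embeddings and compares vectors."""

    def __init__(self, records: Dict[str, str]) -> None:
        # The service embeds its own records once, ahead of time.
        self._vectors = {rid: embed(text) for rid, text in records.items()}

    def query(self, query_vec: List[float], k: int = 1) -> List[str]:
        """Rank records against a query vector; the raw query text never arrives."""
        scores = {
            rid: sum(q * v for q, v in zip(query_vec, vec))
            for rid, vec in self._vectors.items()
        }
        return sorted(scores, key=lambda rid: scores[rid], reverse=True)[:k]


if __name__ == "__main__":
    # Hypothetical records held by the service.
    service = SearchService({
        "r1": "type 2 diabetes insulin dosing",
        "r2": "pediatric asthma inhaler guidance",
    })
    # Client side: embed locally, send only the vector.
    query_vec = embed("insulin dosing for diabetes")
    print(service.query(query_vec, k=1))
```

The design choice that matters is the interface: `query` accepts a list of floats, so the only thing crossing the wire is the embedding.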

I would not exactly call this secure – even if OpenAI manages to fortify against hacks such that embeddings are never fully reversible, I would bet you could train a model to reverse the embeddings with reasonably high fidelity.

But still, combined with a TOS promise not to reverse your embeddings, this could maybe be enough for, e.g., HIPAA compliance. If not, you can imagine elaborations that would allow you to obscure sensitive details while still supporting semantic comparison. Conceptually, I think there’s something here…

Returning to the paper: the authors do not provide the full prompts. This is presumably because they came away alarmed enough to issue warnings, and thus don't want to make extending their work super accessible.

I would be interested to know how they prompt the "web researcher" in particular because, in my experience in everyday settings, the SEO spam that comes up for many keywords often leads Bing astray. Maybe chemical search terms don’t have this problem? In any event, given that most of their experiments seem to work, they must have done a good job. They even got the agent to auto-debug & successfully run some Emerald Cloud Lab code.
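The post doesn't say how the authors implemented auto-debugging, but the general pattern is a retry loop: run the generated code, capture the error, hand both back to the model, and try again. Here is a minimal sketch assuming a `propose_fix(code, error)` callable that would wrap an LLM call; `toy_fixer` is a hypothetical stand-in that patches one known mistake. Note that `exec` runs arbitrary code, so a real system would need a sandbox.

```python
import traceback
from typing import Callable, List, Optional, Tuple


def run_snippet(code: str) -> Optional[str]:
    """Execute code in a fresh namespace; return the error text, or None on success.

    exec() runs arbitrary code; a real system would use an isolated sandbox.
    """
    try:
        exec(code, {})
        return None
    except Exception:
        return traceback.format_exc(limit=1)


def auto_debug(
    code: str,
    propose_fix: Callable[[str, str], str],
    max_attempts: int = 3,
) -> Tuple[bool, List[str]]:
    """Run code; on failure ask propose_fix for a revision, up to max_attempts runs.

    Returns (succeeded, every version of the code that was produced).
    """
    versions = [code]
    for attempt in range(max_attempts):
        error = run_snippet(code)
        if error is None:
            return True, versions
        if attempt + 1 < max_attempts:
            # In a real agent, this is where the error goes back to the model.
            code = propose_fix(code, error)
            versions.append(code)
    return False, versions


def toy_fixer(code: str, error: str) -> str:
    """Hypothetical stand-in for an LLM: patches one known mistake, else gives up."""
    if "NameError" in error and "sqroot(" in code:
        return "import math\n" + code.replace("sqroot(", "math.sqrt(")
    return code


if __name__ == "__main__":
    ok, versions = auto_debug("x = sqroot(16)", toy_fixer)
    print(ok, len(versions))
```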

This means that we can now go from a high-level natural language prompt to a protocol that is carried out with actual reagents, in a fully automated fashion. And while this is not shocking in the context of all the marvels we've seen recently, it is truly incredible, and likely to accelerate the pace of scientific research.

I studied Chemistry as an undergrad at Harvard, and worked as an organometallic catalysis research assistant for a year. I did make one minor discovery that I’m proud of. But the work itself honestly sucked. I weighed out so much fine powder that I felt more like a drug dealer than a scientist.

At that time, Emerald Cloud Lab was already changing that tedious reality by turning lab work into a code-powered automation problem, but you still had to code everything yourself. That's tedious too.

The sheer ability to auto-generate full protocols will be a huge accelerator for scientific discovery. I'll bet that services like Emerald Cloud Lab will soon be much more supply-constrained than cloud GPU services, if they aren't already.

But I have to ask… does this mean that AI is doing science?

Forgive the interruption, but here's a quick, hopefully helpful, flag: I’m starting to answer readers’ questions live on The Cognitive Revolution Podcast discussion episodes, which release on Tuesdays. If you have a question or concern you want me to take up, feel free to leave it in the comments or respond to this newsletter!

If you define science as using experiments to test and falsify hypotheses, I would say that is still not really demonstrated by the work in the Emergent Autonomous Scientific Research Capabilities of LLMs paper.

- Planning: check
- Research: check
- Using documentation: check
- Code generation: check
- Auto-debugging: check
- Hypothesis-driven science: X

My one suggestion for the authors would be to adjust the language they use to describe the agent, changing "for autonomous design, planning, and execution of scientific experiments" to "for autonomous design, planning, and execution of scientific protocols." From what I can tell, what the agent is really designing here is not an experiment, but a low-level plan to carry out a remarkably complex task.

When the agent is asked to synthesize aspirin, for example, there is no hypothesis being tested; the only true experiment running here is the one that determines whether AI can do the various tasks.

FWIW, I don't object to the word "Emergent" in the title. While the term is arguably ill-defined, I think it points to something important: namely, that specific capabilities come online at unpredictable times in the course of both model training and deployment.

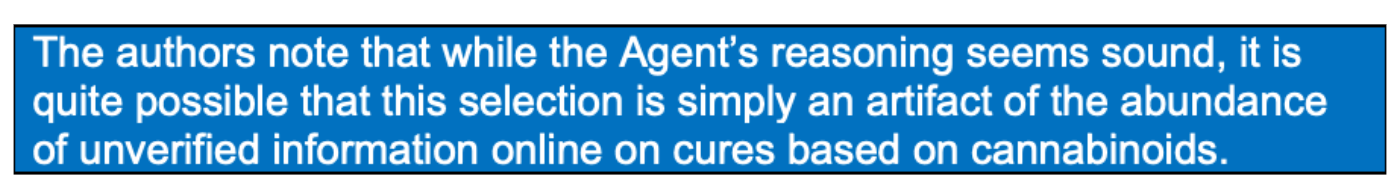

The system does attempt science once, when asked to develop a new cancer drug. “The model approached the analysis in a logical & methodical manner,” but note that this could be a mirage, and I believe it almost certainly is.

The model's conceptual work, from my read of the transcript, consists of:

- Using web search to identify a common biological target for cancer drugs
- Identifying a convenient precursor molecule that could be systematically modified to create individual drug candidates

This is amazing, but … there does not seem to be any insightful hypothesis here. The AI never motivates an experiment with a particular question. The model itself says that it is following a high-level trend, and seems to be headed for brute-force exploration of the chemical space.

This matches my general experience. GPT-4's scientific knowledge is not especially deep. It can pass many undergrad exams, and can process information well enough to answer questions, but I have not seen any examples of truly insightful, original ideas. Someone may yet find a way to elicit them, but I'm not expecting that from this generation.

You can see the potential for automation of scientific discovery here, but it would look more like broad, systematic, borderline brute-force exploration than hypothesis-driven experimentation.
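To see why "systematically modifying a precursor" drifts toward brute force, here is a small sketch of how candidate counts multiply. The modification sites and substituent labels are made up for illustration and are not taken from the paper's transcript; the point is only that the number of variants is the product of the options available at each site.

```python
from itertools import product
from math import prod
from typing import Dict, List, Tuple


def enumerate_variants(sites: Dict[str, List[str]]) -> List[Tuple[str, ...]]:
    """Every combination that picks one substituent per modification site."""
    return list(product(*sites.values()))


def count_variants(sites: Dict[str, List[str]]) -> int:
    """Size of the space without listing it: the product of options per site."""
    return prod(len(options) for options in sites.values())


# Hypothetical scaffold with three modification sites (illustrative labels only).
SITES = {
    "R1": ["H", "CH3", "OH"],
    "R2": ["H", "F", "Cl", "Br"],
    "R3": ["H", "NH2"],
}

if __name__ == "__main__":
    print(count_variants(SITES))  # 3 * 4 * 2 = 24
    print(enumerate_variants(SITES)[:3])
```

With 10 sites and 10 options each, the same arithmetic gives 10^10 candidates, far more than any wet lab could physically run.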

If we had programmable wet labs at the scale of cloud data centers, it might actually make sense to let these things slowly and kind of dumbly map out all sorts of conceptual spaces. But in today's reality, it seems quite likely that AI-generated hypotheses and experiments will have a sufficiently low hit rate – and the physical lab time will remain scarce/expensive enough – that people will not be able to effectively automate science this way.
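A back-of-the-envelope way to see the economics, assuming each automated experiment costs $c$ in lab time and independently produces a useful result with probability $p$: the number of experiments until the first hit is geometrically distributed with mean $1/p$, so

$$
\mathbb{E}[\text{cost per hit}] = \frac{c}{p}
$$

At a hypothetical 1% hit rate, each useful result costs about 100 experiments' worth of lab time, which is why a low hit rate and expensive lab time compound each other.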

More likely to make economic sense in the short term, in my mind, is systematic exploration of simulation space, for example by giving GPT-4 access to a tool like AlphaFold.

Bottom line: for the GPT-4 era, I expect that:

- Scientists will become more productive as they begin to delegate protocol design and execution to AI systems, and yet...
- Humans will remain the main source of truly novel, breakthrough ideas.

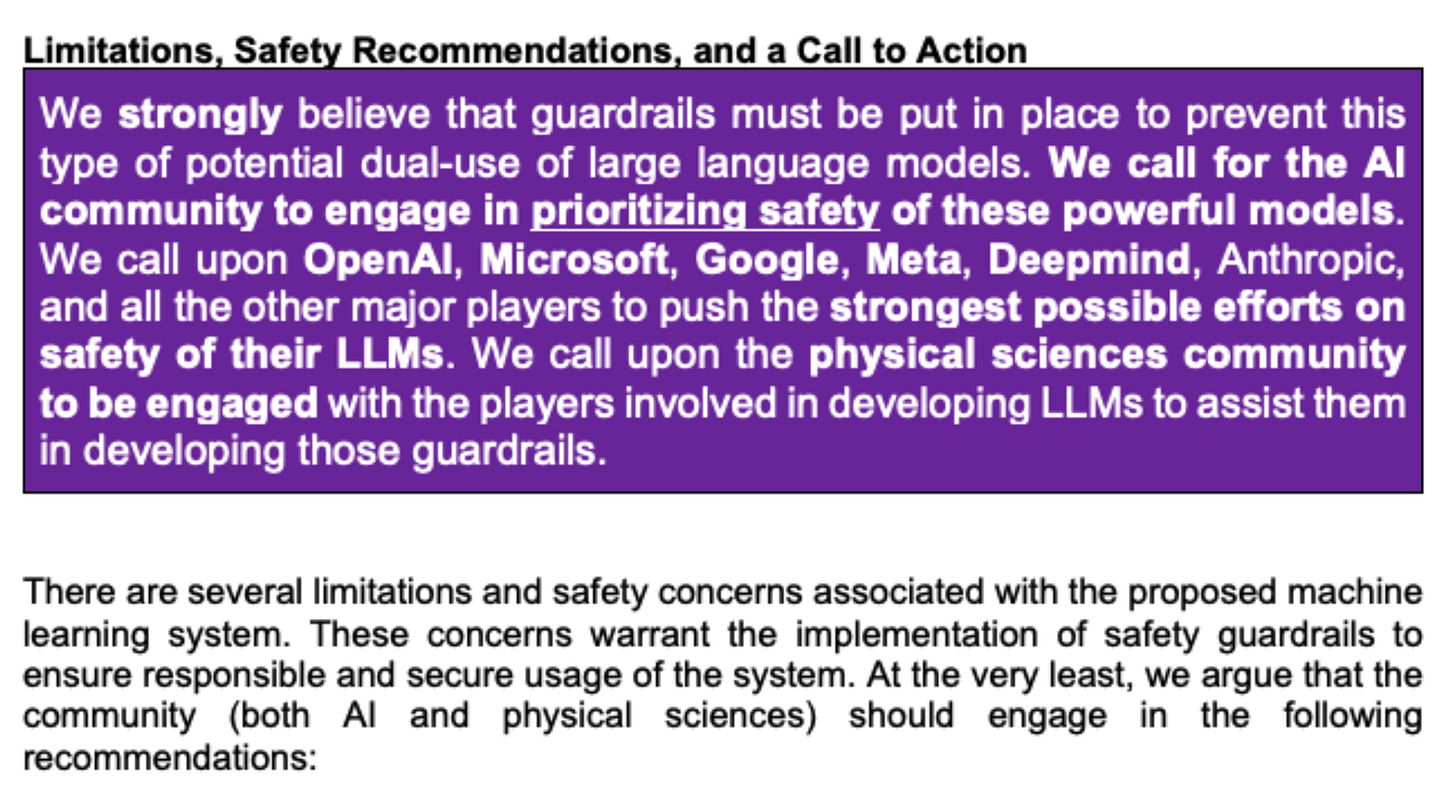

I think we should celebrate and enjoy this result, and absolutely NOT rush to create AI scientists capable of generating more insightful hypotheses/experiment designs than humans do today. We are nowhere close to having sufficient control of AI to create such systems.